Sumana Das, Pune

There is a real possibility that The New York Times and OpenAI could end up in court. According to legal experts, the publisher is considering legal action against the artificial intelligence company to protect the intellectual property rights behind its journalism.

Tensions have recently risen between the two parties over the terms of a licensing agreement under negotiation. The agreement would require the tech company to pay for Times reporting that it has incorporated into its AI tools' suggestions and search results. The discussions, however, have become contentious, prompting the publication to consider legal action.

If that happens, OpenAI would be drawn into the tangled question of copyright protection in generative artificial intelligence, and the dispute could become a high-profile legal battle.

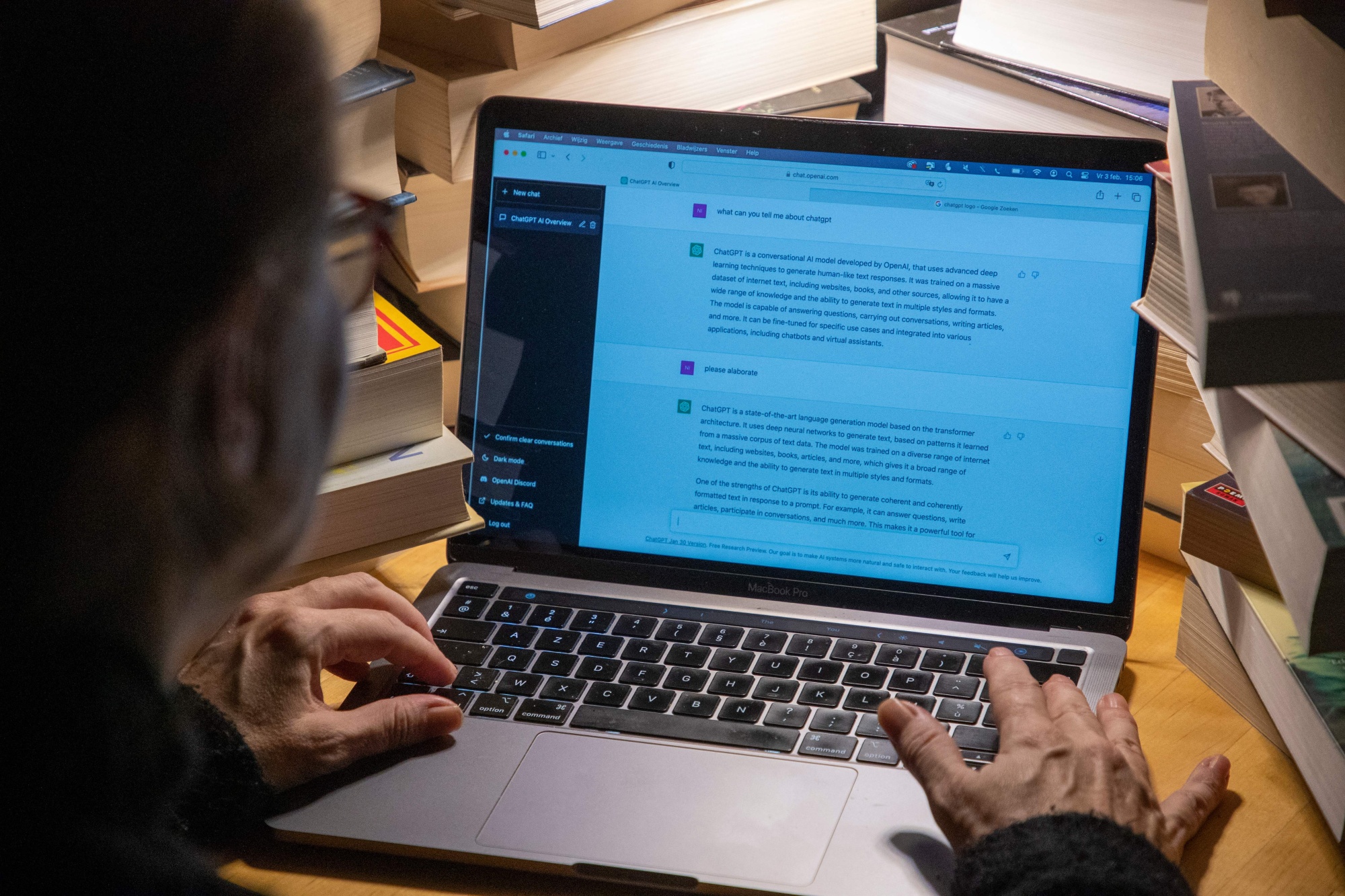

According to confidential sources, the tech firm is preparing for a fight with this mainstream media house. OpenAI's technology has often answered queries with text drawn from reports originally written by New York Times staff. The problem is compounded by search engines that now incorporate AI tools. For instance, Microsoft, currently a major investor in OpenAI, has added ChatGPT to its Bing search engine.

As a result, users searching online might be shown text that originally came from the Times, which could reduce readership of the publication's digital edition. In most cases, ChatGPT generates its answers from a large dataset compiled from sources without their explicit permission.

If OpenAI is found liable for copyright infringement, a federal court could rule that ChatGPT unlawfully copied Times articles in violation of copyright law. The court could then order the company to destroy its existing dataset and rebuild it using only text from sources it is authorized to use.

Federal copyright law also allows courts to impose financial penalties of up to $150,000 per work for willful infringement.

The dispute follows the Times' decision not to join other media outlets in negotiations with the tech firm over the use of their content in AI development tools.

Such a case would add to a series of lawsuits against OpenAI. In addition to media organizations, several public figures have sued the company for using their content without permission. Another tech firm, Stability AI, has been sued by Getty Images for allegedly using more than 12 million Getty images without permission to train an AI model. The legality of such practices remains an open question.