Mannat Saini, Pune

A government advisory issued last week mandated that generative artificial intelligence companies seek permits before deploying “untested” AI systems. However, officials have clarified that this applies only to tech giants like OpenAI and Google.

The advisory, aimed at industry giants, stated that their services must not generate unlawful responses for Indian users. Citing the risks of deepfakes and misinformation, it also said that the systems should not produce content that threatens the integrity of the upcoming parliamentary elections. It mandated that platforms offering under-testing models or large language models (LLMs) seek permission from the central government and label them accordingly.

The IT Ministry advisory faced backlash from generative AI startups as well as from investors who feared regulatory overreach. The founder of Perplexity AI, Aravind Srinivas, termed the advisory a “bad move by India.”

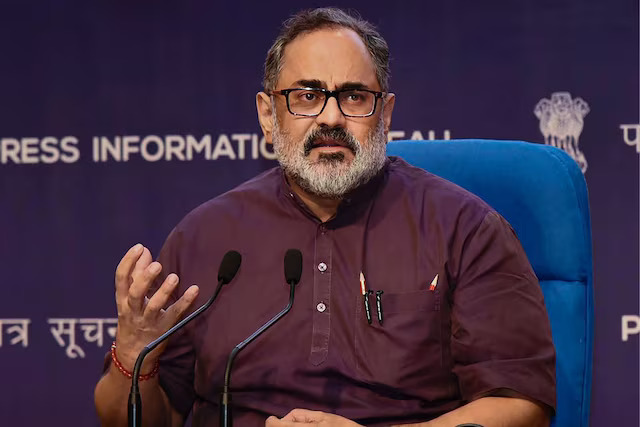

On March 4, the Minister of State for Electronics and IT, Rajeev Chandrasekhar, posted on X (formerly Twitter) to clarify, “The advisory is aimed at significant platforms, and permission seeking from MeitY is only for large platforms and will not apply to startups… It is aimed at untested AI platforms deploying on the Indian Internet.”

He said that the labelling of under-testing models, consent-based disclosure, and permission requirements serve as insurance for users.

Legal experts have raised concerns about the basis of the advisory, questioning under which law the government could issue guidelines to generative AI companies. However, officials say that the advisory was issued under the Information Technology Rules, 2021, and was sent as an act of “due diligence.”

IT Minister Ashwini Vaishnaw said that the advisory was not yet intended as a legal framework but was an effort directed at the testing of, and experimentation with, AI models that were not fully developed.

Vaishnaw said, “Whether an AI model has been tested or not, whether proper training has happened or not, is important to ensure the safety of citizens and democracy. That’s why the advisory has been brought. Some people came and said, ‘Sorry, we didn’t test the model enough’. That is not right. Social media platforms have to take responsibility for what they are doing.”